The selected topic is Using NeRF to Construct Animatable Musicians. Our goal is a system that takes a piece of music as input and synthesizes novel views of a person playing an instrument to that music. The result is a mixed-reality video with novel views and novel poses. To achieve this, we use Neural Radiance Fields (NeRF), a recently popular technique for 3D novel view synthesis.

Creating human animations involves substantial manual labor, and many people rely on computer graphics (CG) tools for this task. Inspired by Scream Lab's work at NCKU, we aim to construct virtual players. In practice, however, the process is complex: it requires multiple software tools, such as Native Access, Reaper, and Unity, along with several programming languages and manual operations. Animating in Unity may also produce less realistic visuals. To address this, we propose using NeRF and simplifying the pipeline to Python and related libraries.

Create a system that takes only music as input and outputs a novel-view video of a specific person playing instruments, without the need for CG tools.

Input: Music and preprocessed human poses

Output: A novel-view video of a musician playing instruments, with body poses synchronized to the music
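The write-up does not spell out how the body poses are kept in sync with the music. The sketch below is one possible approach under stated assumptions: each note onset, given in beats at a fixed tempo (BPM), becomes a pose keyframe; each preprocessed pose is a flat vector of joint parameters; and in-between frames are filled by linear interpolation. The function names `beat_to_frame` and `interpolate_poses` and all example values are hypothetical.

```python
import numpy as np


def beat_to_frame(beat, bpm, fps):
    """Convert a note position in beats to the nearest video frame index."""
    seconds = beat * 60.0 / bpm  # one beat lasts 60 / bpm seconds
    return int(round(seconds * fps))


def interpolate_poses(key_frames, key_poses, num_frames):
    """Linearly interpolate pose vectors between keyframes.

    key_frames: strictly increasing frame indices, one per keyframe
    key_poses:  (K, D) array, one pose vector per keyframe
    Returns a (num_frames, D) array; frames outside the keyframe range
    hold the first or last keyframe pose.
    """
    key_frames = np.asarray(key_frames, dtype=float)
    key_poses = np.asarray(key_poses, dtype=float)
    frames = np.arange(num_frames, dtype=float)
    # np.interp works on 1-D data, so interpolate each pose dimension separately.
    columns = [np.interp(frames, key_frames, key_poses[:, d])
               for d in range(key_poses.shape[1])]
    return np.stack(columns, axis=1)


# Hypothetical example: four notes at 120 BPM, rendered at 30 fps.
note_beats = [0.0, 1.0, 2.0, 4.0]
key_frames = [beat_to_frame(b, bpm=120, fps=30) for b in note_beats]  # [0, 15, 30, 60]
key_poses = np.array([[0.0, 0.0], [1.0, 0.5], [0.0, 0.0], [1.0, 0.5]])  # toy 2-D poses
poses = interpolate_poses(key_frames, key_poses, num_frames=61)
print(poses.shape)  # (61, 2)
```

Linear interpolation of joint-rotation parameters is a simplification; for large rotations, spherical interpolation of quaternions would give smoother motion.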

We aim to develop a system that transforms a monocular video of a moving human and a musical piece into a realistic, animatable human model. The system will generate a video featuring a virtual musician that closely resembles the human in the input video. This virtual musician will be seen playing an instrument, matching the provided music.

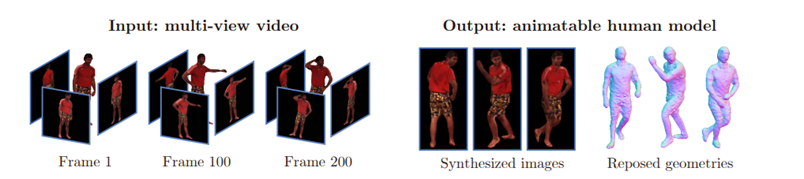

To create a realistic human model, we use NeRF, a method known for rendering lifelike images of a reconstructed 3D scene from arbitrary viewpoints. Given our limited filming and computing resources, we chose InstantAvatar, an open-source model for reconstructing animatable humans.

InstantAvatar, built on NeRF principles, can be trained directly on monocular video and requires relatively little time for both training and rendering images.
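To show what "built on NeRF principles" means at render time, here is a minimal sketch of the standard NeRF volume-rendering rule for a single ray: each sample contributes its color weighted by its opacity $\alpha_i = 1 - e^{-\sigma_i \delta_i}$ and by the transmittance $T_i = \prod_{j<i}(1-\alpha_j)$. This is the generic formulation, not InstantAvatar's accelerated implementation; the function name `render_ray` and the example values are hypothetical.

```python
import numpy as np


def render_ray(sigmas, colors, t_vals):
    """Composite samples along one ray with the NeRF quadrature rule.

    sigmas: (N,) volume densities at the sample points
    colors: (N, 3) RGB colors at the sample points
    t_vals: (N,) increasing distances of the samples along the ray
    Returns (rgb, depth, weights).
    """
    sigmas = np.asarray(sigmas, dtype=float)
    colors = np.asarray(colors, dtype=float)
    t_vals = np.asarray(t_vals, dtype=float)
    # delta_i: spacing between adjacent samples; the last interval is treated as very long.
    deltas = np.append(np.diff(t_vals), 1e10)
    # alpha_i = 1 - exp(-sigma_i * delta_i): opacity of each segment.
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # T_i = prod_{j<i} (1 - alpha_j): chance the ray reaches sample i unoccluded.
    trans = np.cumprod(np.append(1.0, 1.0 - alphas[:-1]))
    weights = trans * alphas
    rgb = (weights[:, None] * colors).sum(axis=0)
    depth = (weights * t_vals).sum()  # expected distance at which the ray terminates
    return rgb, depth, weights


# Example: an empty sample in front of a dense red sample.
rgb, depth, weights = render_ray(
    sigmas=[0.0, 1e3],
    colors=[[0.0, 0.0, 1.0], [1.0, 0.0, 0.0]],
    t_vals=[0.0, 1.0],
)
# rgb is [1, 0, 0] and depth is 1.0: the empty sample contributes nothing.
```

The same weights also yield a per-ray depth, which is useful whenever a NeRF render must be combined with other geometry.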

To animate the virtual musician so that it plays the notes of the given piece at the correct tempo, we implemented a keyframe algorithm that reduces manual labor. We then add the instrument to the scene using the Z-buffer algorithm and finally output a novel-view video of the virtual musician playing the piece.
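The Z-buffer step can be sketched as a per-pixel depth test between two layers rendered from the same camera. This assumes (not stated in the write-up) that the avatar render provides a depth map, for example the expected ray depth from volume rendering, that the instrument is rendered separately into color and depth with matching intrinsics, extrinsics, and depth units, and that empty pixels carry infinite depth. The function name `zbuffer_composite` and the toy images are hypothetical.

```python
import numpy as np


def zbuffer_composite(rgb_a, depth_a, rgb_b, depth_b):
    """Merge two renders from the same camera, keeping the nearer surface per pixel.

    rgb_*:   (H, W, 3) color images
    depth_*: (H, W) depths along the camera rays; np.inf where a layer is empty
    """
    depth_a = np.asarray(depth_a, dtype=float)
    depth_b = np.asarray(depth_b, dtype=float)
    nearer_a = depth_a <= depth_b  # ties go to layer a
    rgb = np.where(nearer_a[..., None], rgb_a, rgb_b)
    depth = np.minimum(depth_a, depth_b)  # the merged Z-buffer
    return rgb, depth


# Example: a 1x2 image. The instrument (blue) is in front of the avatar (red)
# at pixel 0; the avatar is in front of the instrument at pixel 1.
avatar_rgb = np.zeros((1, 2, 3))
avatar_rgb[..., 0] = 1.0
instrument_rgb = np.zeros((1, 2, 3))
instrument_rgb[..., 2] = 1.0
avatar_depth = np.array([[2.0, 1.0]])
instrument_depth = np.array([[1.5, 3.0]])
rgb, depth = zbuffer_composite(avatar_rgb, avatar_depth, instrument_rgb, instrument_depth)
# rgb[0, 0] is blue, rgb[0, 1] is red, and depth is [[1.5, 1.0]].
```

A hard per-pixel choice can leave jagged silhouettes, because NeRF renders have soft, partially transparent edges; blending layers by depth order with their alpha values would be a smoother alternative.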

Figure: pipeline

Tianjian Jiang, Xu Chen, Jie Song, Otmar Hilliges, "InstantAvatar: Learning Avatars from Monocular Video in 60 Seconds," In CVPR, 2023.
