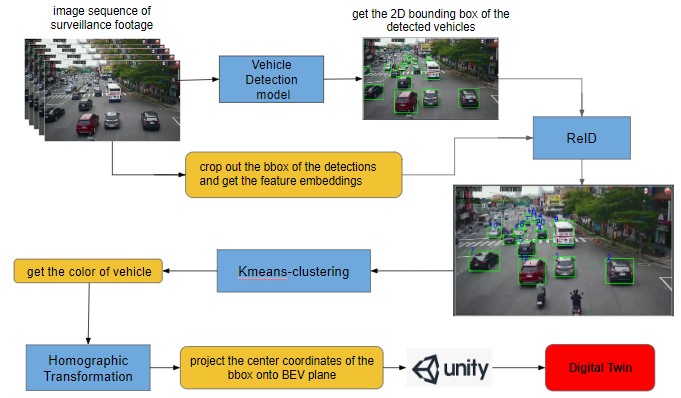

Workflow

Perform vehicle detection

First, we convert the input surveillance camera footage into a sequence of images, sampled at 10 Hz. The input video is nearly 50 seconds long, which yields 490 input images. Next, we run a pre-trained YOLOv3 model on each image to detect objects.
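As a rough illustration of the sampling step, the sketch below picks which native frames to keep when a video is downsampled to 10 Hz. The function name `sample_frame_indices`, the 30 fps native frame rate, and the 1470-frame length are assumptions chosen so that the count matches the 490 images mentioned above; the actual footage parameters are not stated here.

```python
import math

def sample_frame_indices(total_frames, native_fps, sample_hz=10.0):
    """Return the indices of the native frames closest to each sampling instant."""
    duration_s = total_frames / native_fps
    # Small epsilon guards against floating-point results such as 489.9999.
    n_samples = math.floor(duration_s * sample_hz + 1e-9)
    step = native_fps / sample_hz  # native frames per sampled frame
    return [min(total_frames - 1, round(k * step)) for k in range(n_samples)]

# Hypothetical footage: 49 s at 30 fps (1470 native frames), sampled at 10 Hz.
indices = sample_frame_indices(total_frames=1470, native_fps=30.0)
print(len(indices), indices[:4])
```

Each selected index would then be decoded and saved as one image before detection is run on it.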

Vehicle ReID and Tracking

ReID: Before object tracking, each vehicle must go through ReID (re-identification). We use ResNet for ReID, mainly as a feature extractor.
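The text does not say how the extracted features are compared. A common choice, assumed here purely for illustration, is cosine distance between embedding vectors. In the sketch below, `cosine_distance`, `best_match`, the `max_distance` value, and the toy 3-dimensional vectors are all hypothetical; real ResNet embeddings would have hundreds of dimensions.

```python
import math

def cosine_distance(a, b):
    """1 - cosine similarity; 0 means identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

def best_match(query, gallery, max_distance=0.3):
    """Return the id of the closest stored feature, or None if nothing is close enough.

    gallery maps a track id to the feature vector stored for that vehicle.
    """
    if not gallery:
        return None
    best_id = min(gallery, key=lambda tid: cosine_distance(query, gallery[tid]))
    if cosine_distance(query, gallery[best_id]) <= max_distance:
        return best_id
    return None

# Toy stand-ins for embeddings of two known vehicles.
gallery = {1: [1.0, 0.0, 0.0], 2: [0.0, 1.0, 0.0]}
print(best_match([0.9, 0.1, 0.0], gallery))
```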

Tracking: In each frame, associating the existing tracks with the new detections produces three groups: Matched Tracks, Unmatched Tracks, and Unmatched Detections. The age of every track is updated in each frame. If the age of an Unmatched Track exceeds the defined max_age, the vehicle is assumed to have disappeared and the track is discarded. If the score of an Unmatched Detection exceeds a certain threshold, a new track is created for that detection.
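The track lifecycle described above can be sketched as follows. This is a minimal sketch under stated assumptions, not the project's tracker: the `Track` class, the `step` function, and the `min_score` default are hypothetical, and "age" is assumed to mean the number of frames since the track was last matched.

```python
from dataclasses import dataclass

@dataclass
class Track:
    track_id: int
    box: tuple      # (x1, y1, x2, y2)
    age: int = 0    # assumption: frames since the last matched detection

def step(tracks, matched, unmatched_dets, next_id, max_age=30, min_score=0.5):
    """Advance the tracker by one frame.

    tracks:         dict track_id -> Track
    matched:        dict track_id -> new box for tracks matched in this frame
    unmatched_dets: list of (box, score) detections with no matching track
    Returns the surviving tracks and the next free track id.
    """
    survivors = {}
    for tid, trk in tracks.items():
        if tid in matched:
            trk.box, trk.age = matched[tid], 0   # Matched Track: refresh
        else:
            trk.age += 1                         # Unmatched Track: grows older
        if trk.age <= max_age:                   # discard once age exceeds max_age
            survivors[tid] = trk
    for box, score in unmatched_dets:
        if score > min_score:                    # confident Unmatched Detection
            survivors[next_id] = Track(next_id, box)
            next_id += 1
    return survivors, next_id

tracks, next_id = step({}, {}, [((0, 0, 10, 10), 0.9)], next_id=0)
print(tracks)
```

The association step that produces `matched` and `unmatched_dets` is left out here; it would sit in front of `step`.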

Using K-means to detect color

We crop each bounding box and identify the dominant color within each cropped vehicle region separately. This ensures that color detection for one vehicle's region does not interfere with color detection for other vehicles. We set the number of clusters to 3 for the clustering.
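To make the clustering step concrete, here is a small pure-Python sketch of k-means with 3 clusters over the RGB pixels of one crop, returning the center of the largest cluster as the dominant color. The function names, the iteration count, and the deterministic farthest-point initialization are assumptions made for reproducibility; a library implementation with random or k-means++ seeding would normally be used on real crops.

```python
def dist2(p, q):
    """Squared Euclidean distance between two RGB triples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def dominant_color(pixels, k=3, iters=10):
    """Cluster RGB pixels with k-means and return the largest cluster's center."""
    # Assumed initialization: start from the first pixel, then repeatedly
    # add the pixel farthest from all centroids chosen so far.
    centroids = [pixels[0]]
    while len(centroids) < k:
        centroids.append(max(pixels, key=lambda p: min(dist2(p, c) for c in centroids)))
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in pixels:
            nearest = min(range(k), key=lambda j: dist2(p, centroids[j]))
            clusters[nearest].append(p)
        # Recompute each centroid as the mean of its cluster; keep it if the cluster is empty.
        centroids = [
            tuple(sum(ch) / len(cl) for ch in zip(*cl)) if cl else centroids[j]
            for j, cl in enumerate(clusters)
        ]
    biggest = max(range(k), key=lambda j: len(clusters[j]))
    return tuple(round(v) for v in centroids[biggest])

# Hypothetical crop: mostly red car body, some blue background, a few white highlights.
crop = [(200, 10, 10)] * 60 + [(10, 10, 200)] * 25 + [(240, 240, 240)] * 15
print(dominant_color(crop))
```

Because each crop is clustered on its own, the result for one vehicle never depends on the pixels of another.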

Homographic Transformation

Our goal is to derive the digital twin from a single surveillance camera view, without requiring additional intrinsic or extrinsic camera parameters, so existing methods for determining the transformation matrix cannot be employed. In our implementation, we first locate the satellite imagery of the surveillance camera's location. We then mark features that appear in both the video footage and the satellite image, such as zebra crossings, motorcycle waiting areas, and road markings.
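One standard way to turn marked correspondences into a transformation matrix is the direct linear method for a homography, sketched below in pure Python for exactly four point pairs. This is an illustration under assumptions, not the project's code: the function names and the sample coordinates are hypothetical, the matrix is normalized so that its bottom-right entry is 1, and with more than four marked features a least-squares or robust estimator (for example OpenCV's `cv2.findHomography`) would be the usual choice.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def homography(src, dst):
    """Estimate a 3x3 matrix H (with H[2][2] = 1) from four (x, y) -> (u, v) pairs.

    No three points may be collinear, otherwise the system is singular.
    """
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # From u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), and likewise for v.
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y]); b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y]); b.append(v)
    h = solve(A, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]

def project(H, x, y):
    """Map a camera pixel (x, y) to satellite-image coordinates."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)

# Hypothetical marked features: camera pixels and their satellite-image positions.
camera = [(0, 0), (1, 0), (1, 1), (0, 1)]
satellite = [(10, 20), (30, 22), (28, 45), (8, 40)]
H = homography(camera, satellite)
print(project(H, 0.5, 0.5))
```

Once `H` is known, the bottom-center point of each tracked bounding box could be passed through `project` to place the vehicle on the satellite image.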