Abstract

Skeleton-based action recognition is pivotal for modern fitness apps but often fails when users change their viewing angle. FIAS addresses this by introducing a robust, mixed-view training strategy. Our system not only achieves state-of-the-art accuracy but also integrates a novel Explainable AI (XAI) pipeline. By combining Grad-CAM visualizations with Large Language Models (LLM), we transform complex model data into human-readable, expert-level coaching advice.

The Challenge: Viewpoint Variance

Human Action Recognition (HAR) has become a pivotal technology in fitness applications. However, a significant challenge is viewpoint variance. A model trained on exercise data captured from a single perspective often fails when the user's orientation changes.

Common solutions like lifting 2D skeletons to 3D often introduce noise and estimation errors. FIAS addresses this by systematically exploring the relationship between input dimensionality and training strategies, proving that a mixed-view 2D strategy is robust and effective.

Key Contributions

- Exhaustive cross-view generalization analysis.

- Demonstrated that 2D dynamic features outperform complex 3D models.

- Introduced an XAI-LLM framework for human-centric feedback.

Custom Dataset & Preprocessing

Recognizing the limitations of existing public datasets, we curated a custom video dataset comprising approximately 1,600 video clips of three foundational actions: lunges, squats, and push-ups.

Data Composition

We defined nine distinct classes plus an idle class, categorizing actions into "correct" forms and specific error variants:

Viewpoint Control

Data was rigorously captured from diverse angles to test generalization:

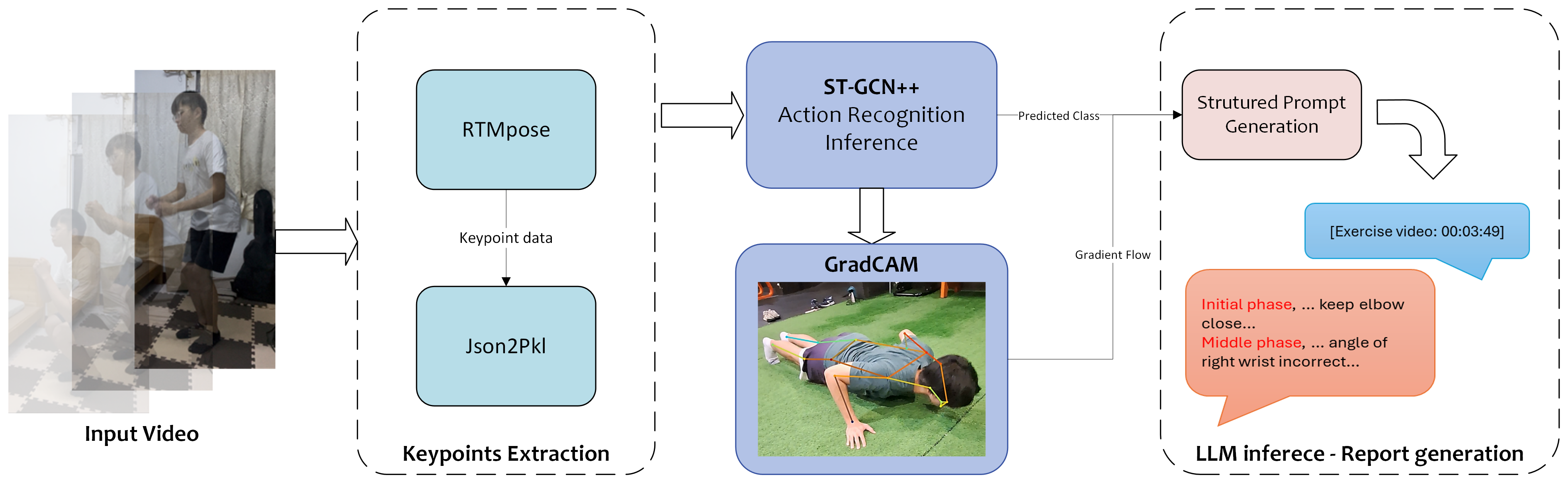

System Pipeline

An end-to-end framework from raw video to personalized advice

Figure 1. The overall architecture of the FIAS pipeline.

1. Pose Estimation

Extracts high-quality 2D skeleton keypoints from raw video frames using the RTMPose model.

2. Action Recognition

Uses ST-GCN++ to classify actions while simultaneously generating Grad-CAM saliency maps to identify error points.

3. LLM Coaching

Synthesizes biomechanical evidence into a structured prompt, allowing the LLM to generate readable advice.

Performance Results

| Modality | Test Accuracy (Top-1) | |

|---|---|---|

| Single-View | Mixed-View (Ours) | |

| 2D Joint | 60.23% | 95.32% |

| 2D Joint Motion | 53.80% | 96.49% |

| 2D Bone Motion | 61.40% | 96.49% |

| 3D Joint (Baseline) | 52.63% | 81.29% |

Table1: Comparison showing Mixed-View 2D models significantly outperform Single-View and 3D baselines.

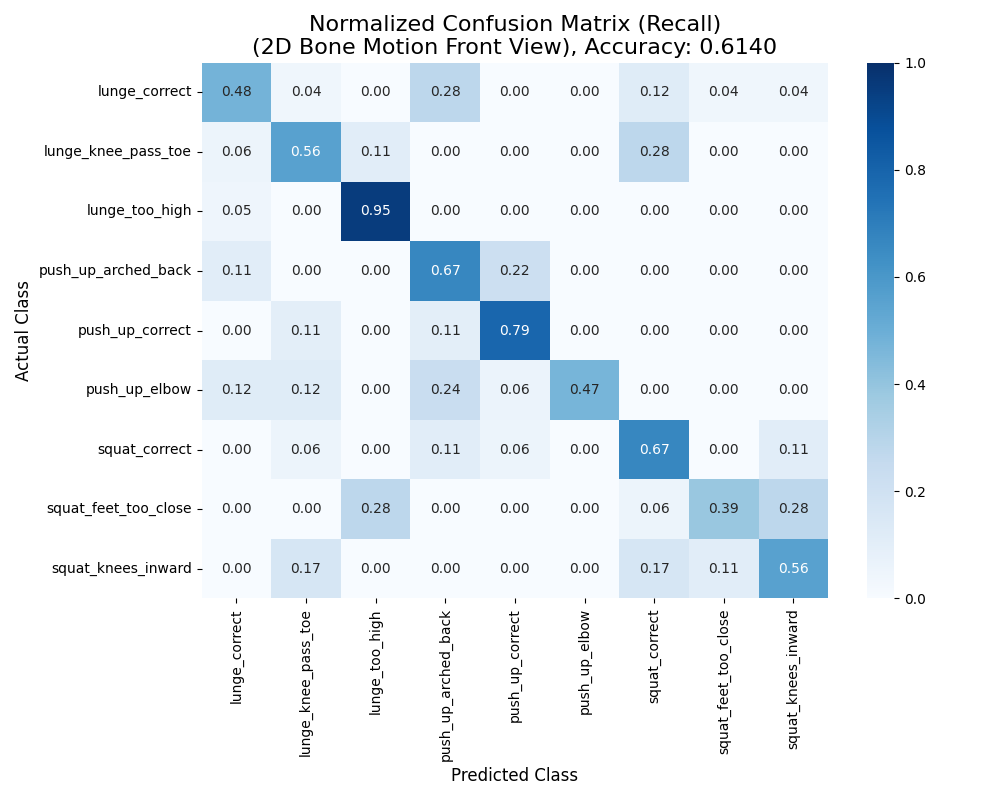

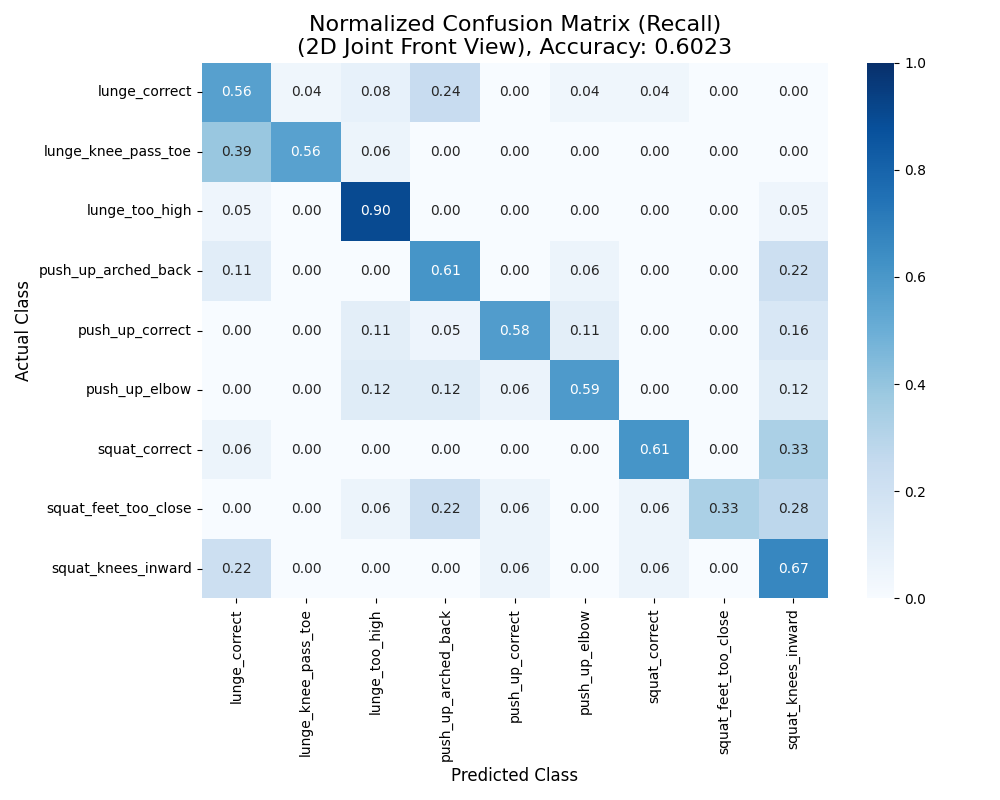

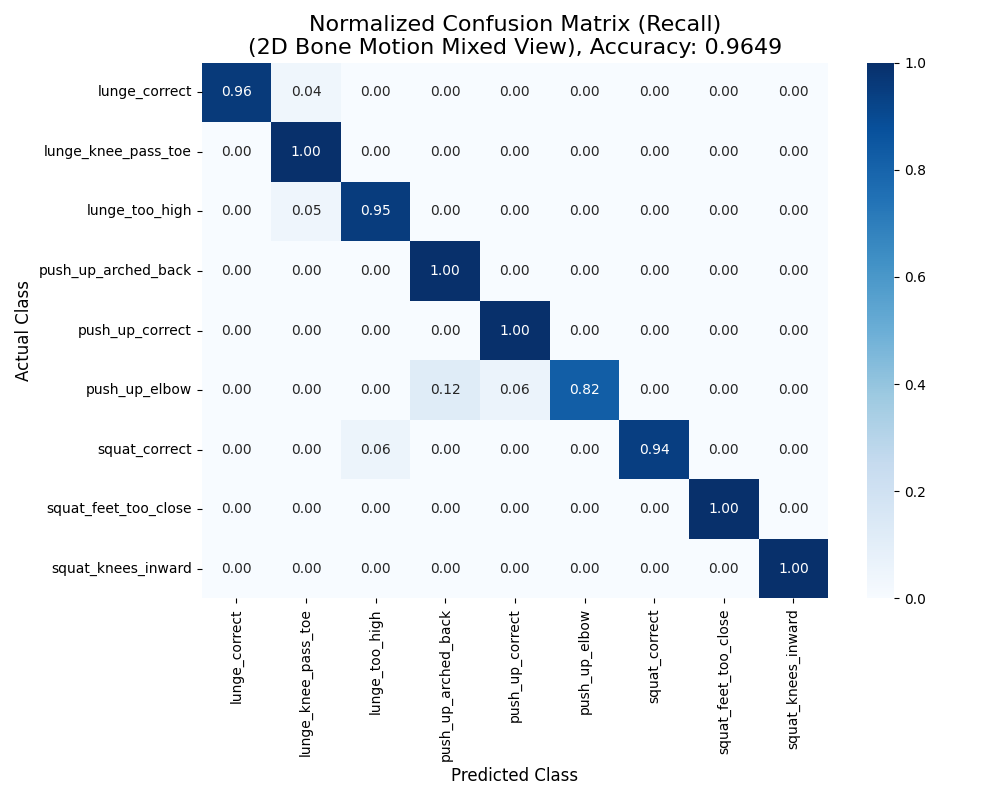

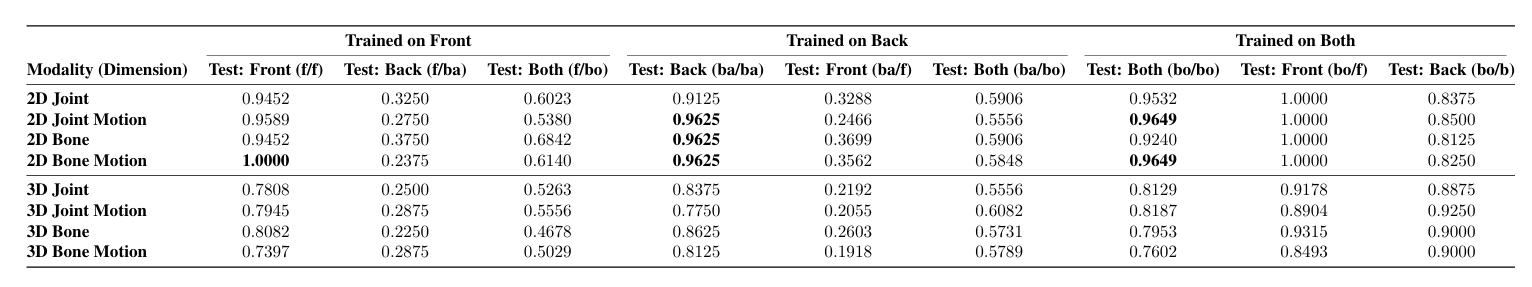

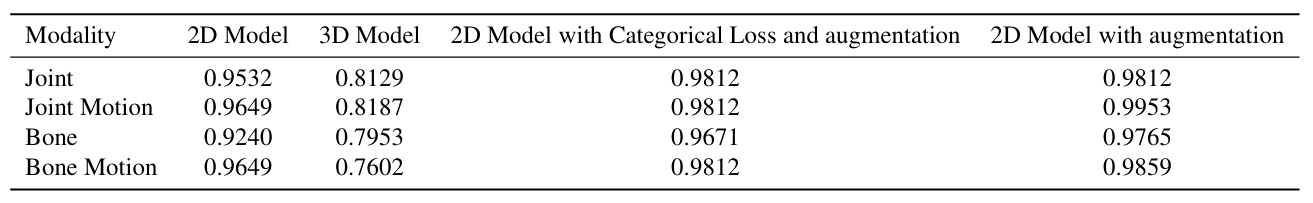

We conducted extensive experiments comparing 2D vs. 3D data and Single-View vs. Mixed-View training strategies. The results conclusively show that 2D data with diverse viewpoints is superior.

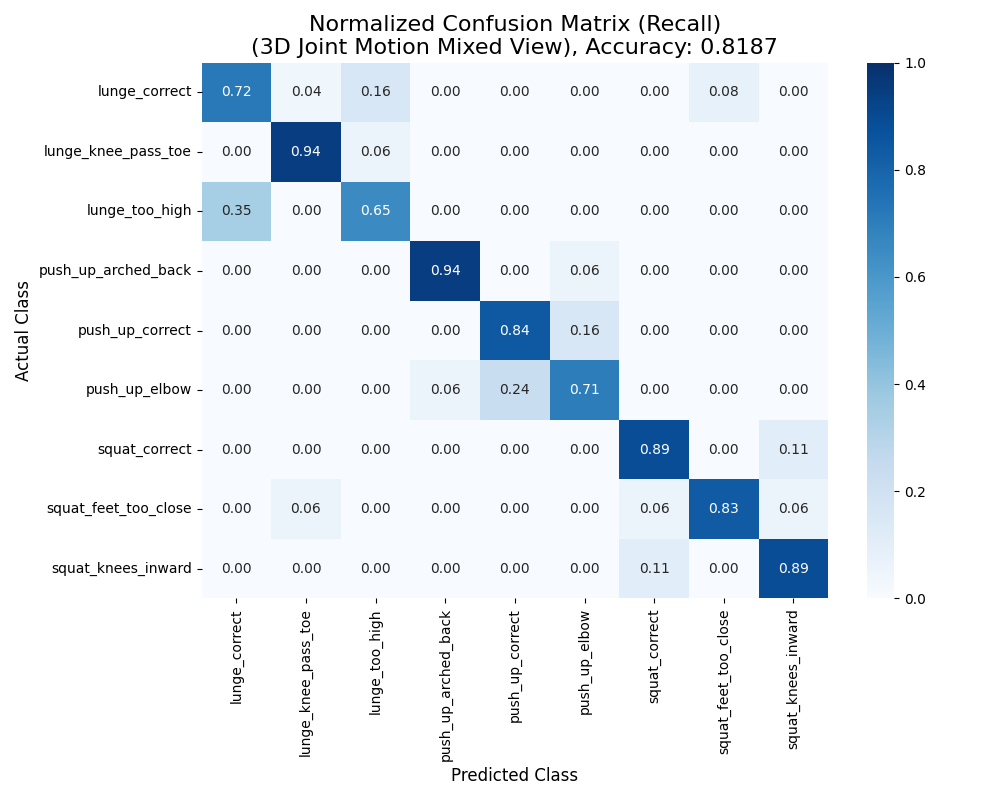

Fig 2a. Single-View

Fig 2b. Baseline

Fig 2c. Best Overall (Mixed)

Fig 2d. Best 3D Model

Figure 2. Comparison of Confusion Matrices. Note the superior diagonalization in Fig 2c.

Table 2. Cross-View Generalization Results (Top-1 Accuracy). This table compares the performance of different modalities when trained on front, back, or both views, and tested across all three conditions. The highest accuracy in each primary test column (f/f, ba/ba, bo/bo) is highlighted in bold.

Table 3. Comparison of Model Accuracy Across Different Input Modalities and Augmentation Strategies. Our augmentations (Random Rotation, Random Scale) were the primary driver of high accuracy. As shown in Table 2, augmentations alone boosted our 2D models to near-perfect Top-1 accuracy (e.g., 99.53% on Joint Motion).

Demonstration

Explainable AI Coaching

How we turn "Black Box" predictions into "White Box" advice.

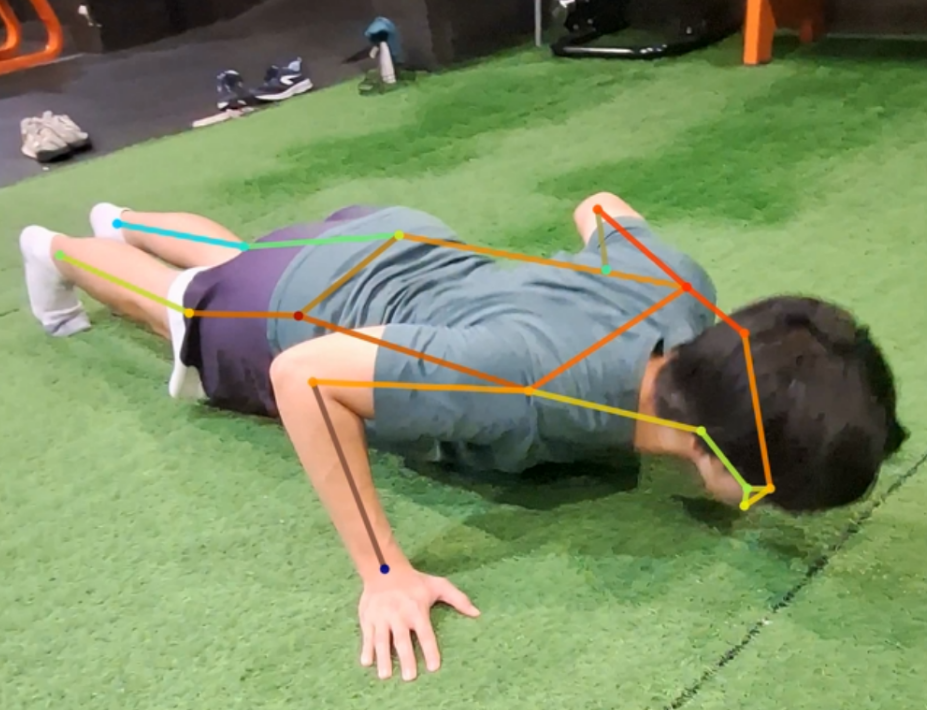

Visualizing the "Why"

In our case study for push-ups, the model correctly identified an "incorrect elbow position." The Grad-CAM heatmap (left) highlights high activation on the wrists and shoulders, pinpointing the compensatory strain.

Figure 3. Grad-CAM visualization identifying stress points.

Detailed AI Coach Feedback Example (Squat)

--- LLM PROMPT ---

The AI model's final prediction for the entire video is 'squat_correct' with an overall confidence of 99.73%.

Based on the model's attention (Grad-CAM) data across the video, here is a summary of key observations:

1. Overall Most Critical Body Parts: Left Shoulder, Right Shoulder, Right Hip.

2. The Most Critical Moment: At frame 23, the model's peak attention (0.00) occurred at the 'Left Shoulder'.

3. Attention Shift Over Time:

- Early Phase (frames 0-16): Primarily focused on the 'Left Hip'.

- Middle Phase (frames 16-32): Primarily focused on the 'Left Shoulder'.

- Late Phase (frames 32-50): Primarily focused on the 'Left Wrist'.

--- TASK FOR THE LANGUAGE MODEL ---

You are an expert AI fitness coach. Based on the sequence of actions and the attention data for the main action 'squat_correct', provide a concise analysis of the user's performance. Your analysis should:

1. Briefly summarize the sequence of actions performed by the user. Note any changes or inconsistencies in form (e.g., 'The user started with a correct squat but then their form degraded into squat_knees_inward').

2. Infer the reason for the model's attention patterns. For example, 'The model focused on the knees and hips, which is where form breakdown typically occurs in an incorrect squat.'

3. Provide clear, actionable advice for correction. For instance, 'To correct this, focus on pushing your knees outward, as if you are trying to spread the floor apart with your feet.'

4. Keep the tone encouraging and professional.

--- AI COACH FEEDBACK ---

I've reviewed your squat performance, and overall, you're doing a great job. Your form is strong, and you're maintaining a consistent posture throughout the exercise. However, I did notice a few areas where we can make some adjustments to take your squat to the next level.

It looks like you started with a solid foundation, but as you descended into the squat, your left shoulder and hip became slightly misaligned. This might be causing a subtle shift in your weight distribution, which could potentially lead to discomfort or injury over time.

I think the reason for this discrepancy is that you might be relying too heavily on your shoulders to stabilize your body, rather than engaging your core and maintaining a neutral spine. This is a common issue, and it's easy to correct with a bit of practice and awareness.

To improve your form, I recommend focusing on keeping your shoulders relaxed and down, and instead, engaging your core muscles to support your body. Imagine you're drawing your belly button towards your spine as you lower into the squat. This will help you maintain a stable, upright posture and reduce the strain on your shoulders.

Additionally, take note of your left wrist and hip position as you move through the exercise. Try to keep your wrist straight and your hip aligned with your knee, rather than letting it shift forward or backward. This will help you maintain balance and generate power from your legs, rather than your upper body.

Remember, practice makes perfect, and it's all about developing good habits and being mindful of your body position. You're off to a great start, and with a few tweaks, you'll be squatting like a pro in no time. Keep up the good work, and don't hesitate to reach out if you have any questions or need further guidance!