BEV-Seg-Net is based on the 2D projection method, which first projects the 3D point cloud into the 2D bird's-eye view, and then maps the semantic segmentation classification back to the 3D point cloud. Although the 2D projection method is prone to lose geometric information, however, due to computational power and storage space considerations, the 2D projection is more efficient than the 3D-based and point methods are more efficient.

In order to handle the huge amount of data in SensatUrban, the BEV projection was divided into three steps.

3D-to-BEV projection, sparse BEV images completion, and BEV-to-3D remapping.

3D-to-BEV projection :

In each step, the points in the sliding window are mapped, and then the mapped points are deleted to reduce the amount of data processing.

Sparse BEV image :

Complete images by Maxpooling.

BEV-to-3D remapping:

Save the absolute coordinates of each sliding window and extract the original point cloud output for 2D semantic segmentation.

The Encoder-Decoder Network Unet is used as a base, which consists of four encoders and five decoders, two of which are ResNet-34.

Each convolutional block has a Batch-Normalization Layer and a Rectified Linear Unit Function (ReLU Layer) .

The multimodal network relies on the Feature Communication of the different layers. Here, a flexible multi-stage fusion network is used to enable multi-pipeline data fusion.

The more important channels are selected by the attention layer. Finally, a 1x1 convolution is added to reduce the dimensionality and maintain a constant output shape.

We mainly process with the BEV images.

Due to the hardware limitation of the GPU, it is difficult for us to use the original size in the paper.

Therefore, we tried three sizes, 100x100, 200x200 and 300x300.

The different sizes of the input images have a great influence on the batch size and the training time.

The original input images use only R, G, and B channels.

Because the BEV resulted in the lack of difference of height between the objects in the 3D space.

Therefore, we added a fourth channel, altitude.

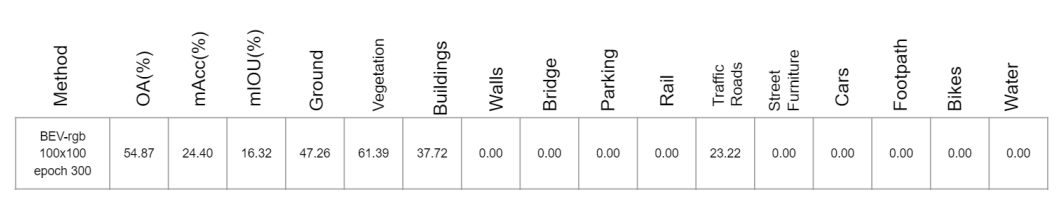

We set image size to 100*100, train with only rgb channels in 300 epochs as our baseline.

The output is below :

Since the performance of using only rgb is not exceed our expectation, so we add height as fourth channel.And the results are shown below.

created with

Website Builder Software .